Abstract

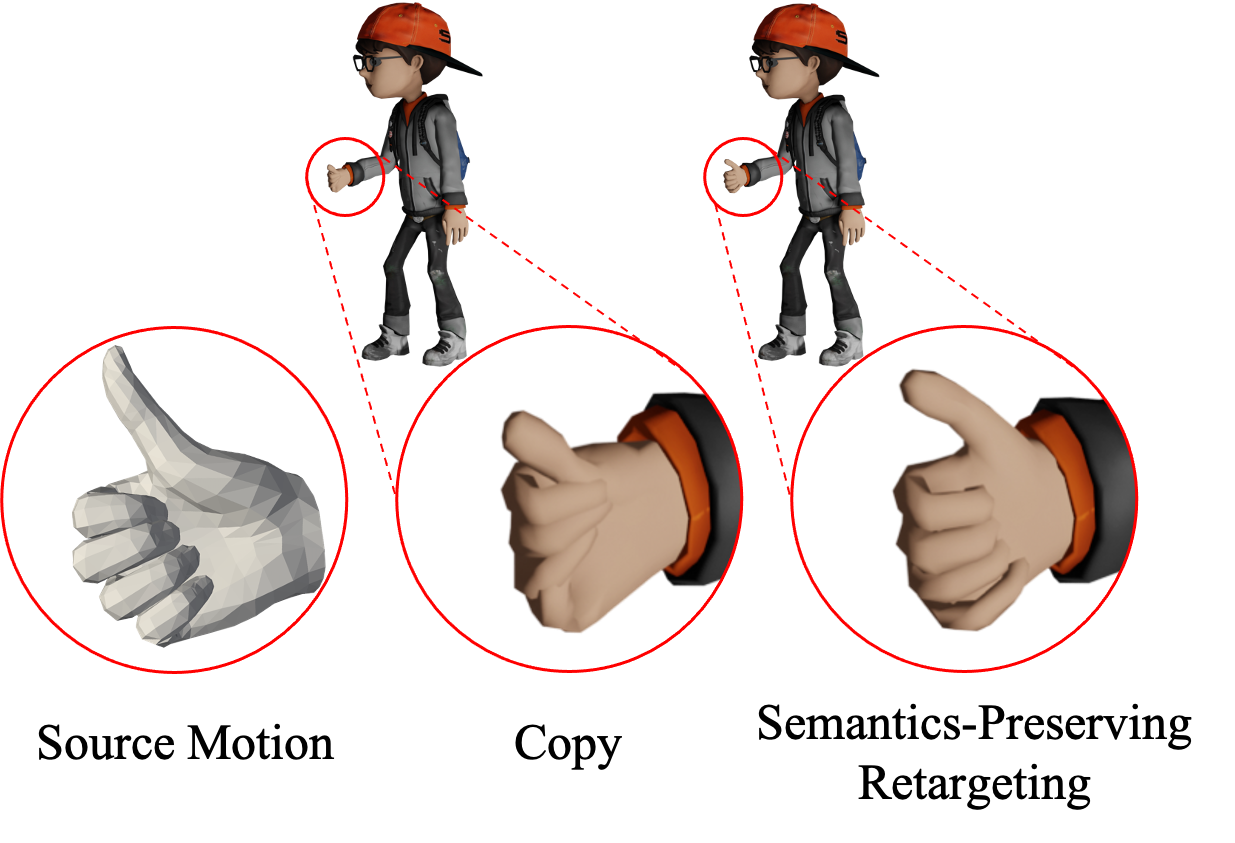

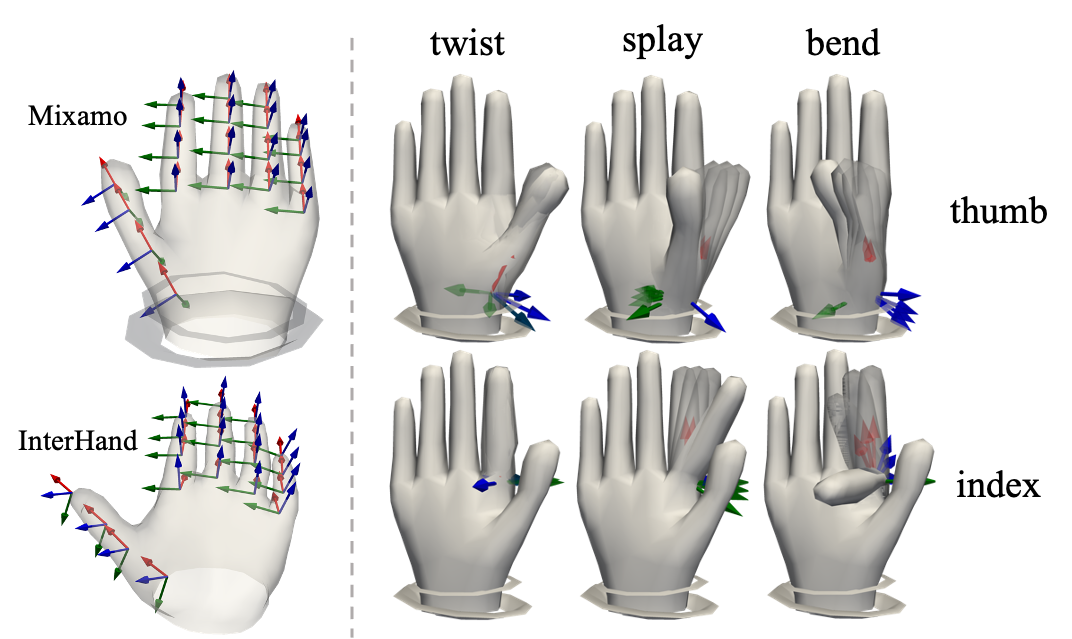

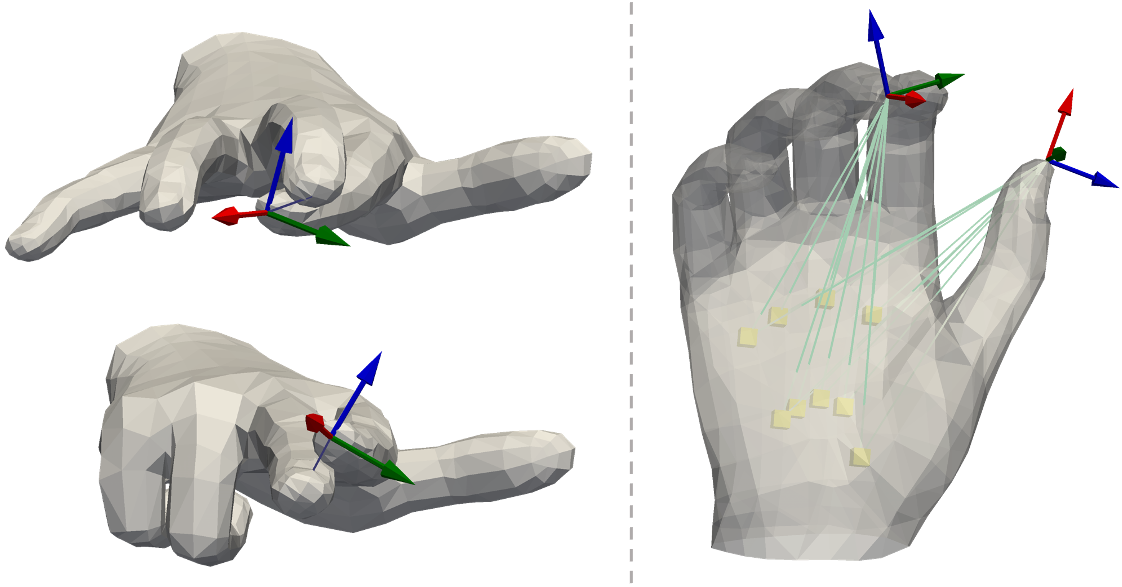

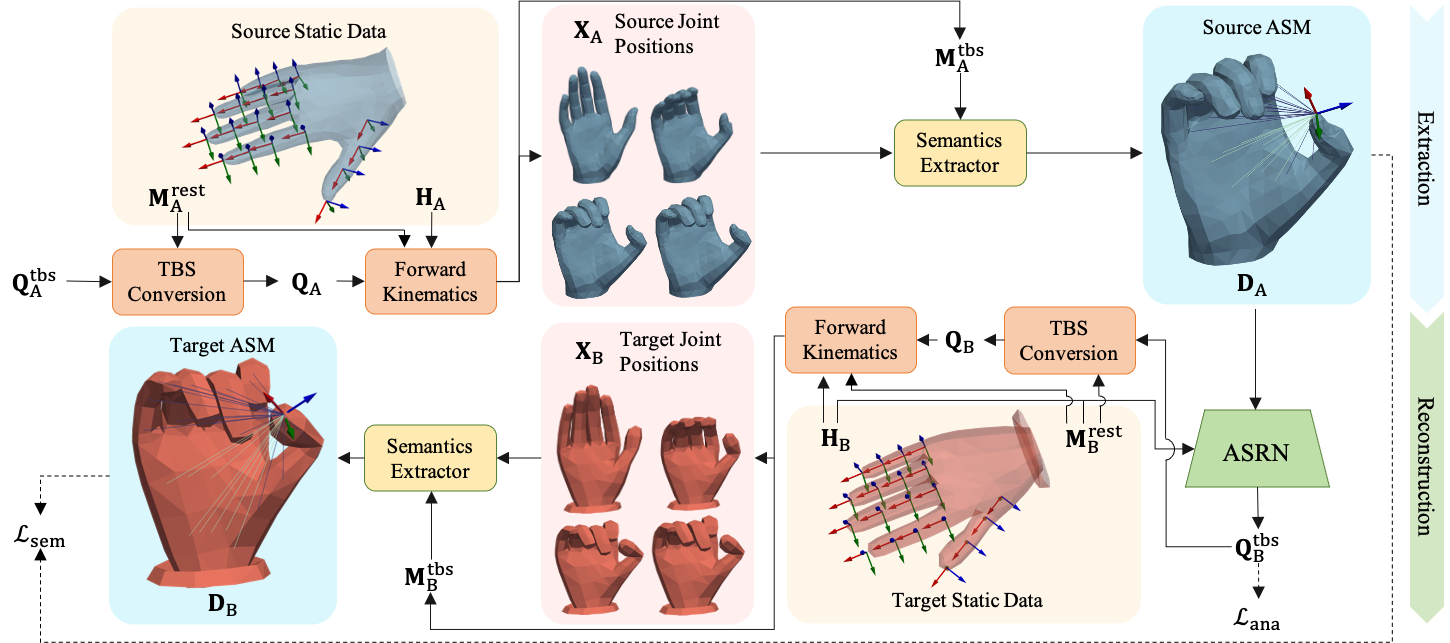

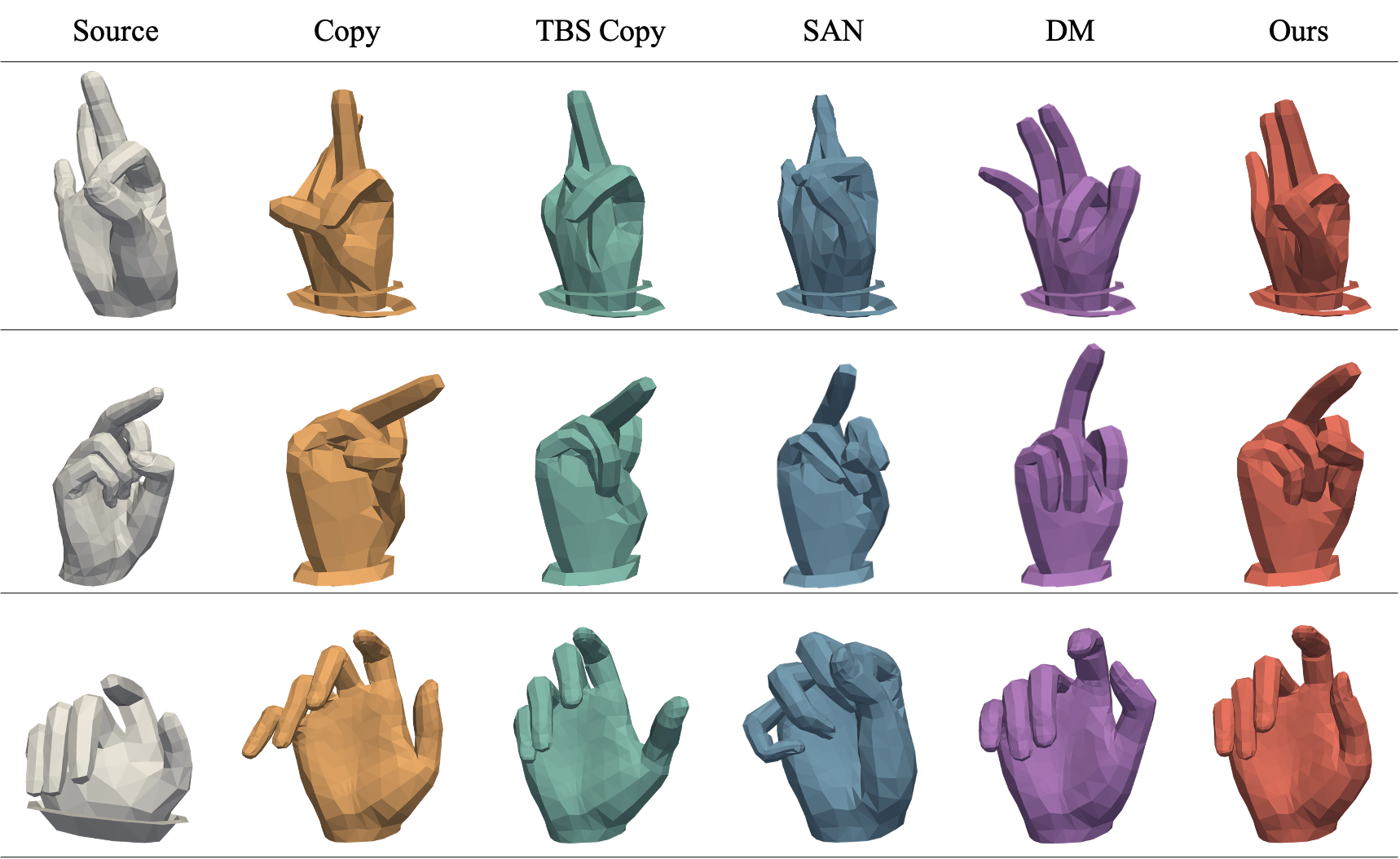

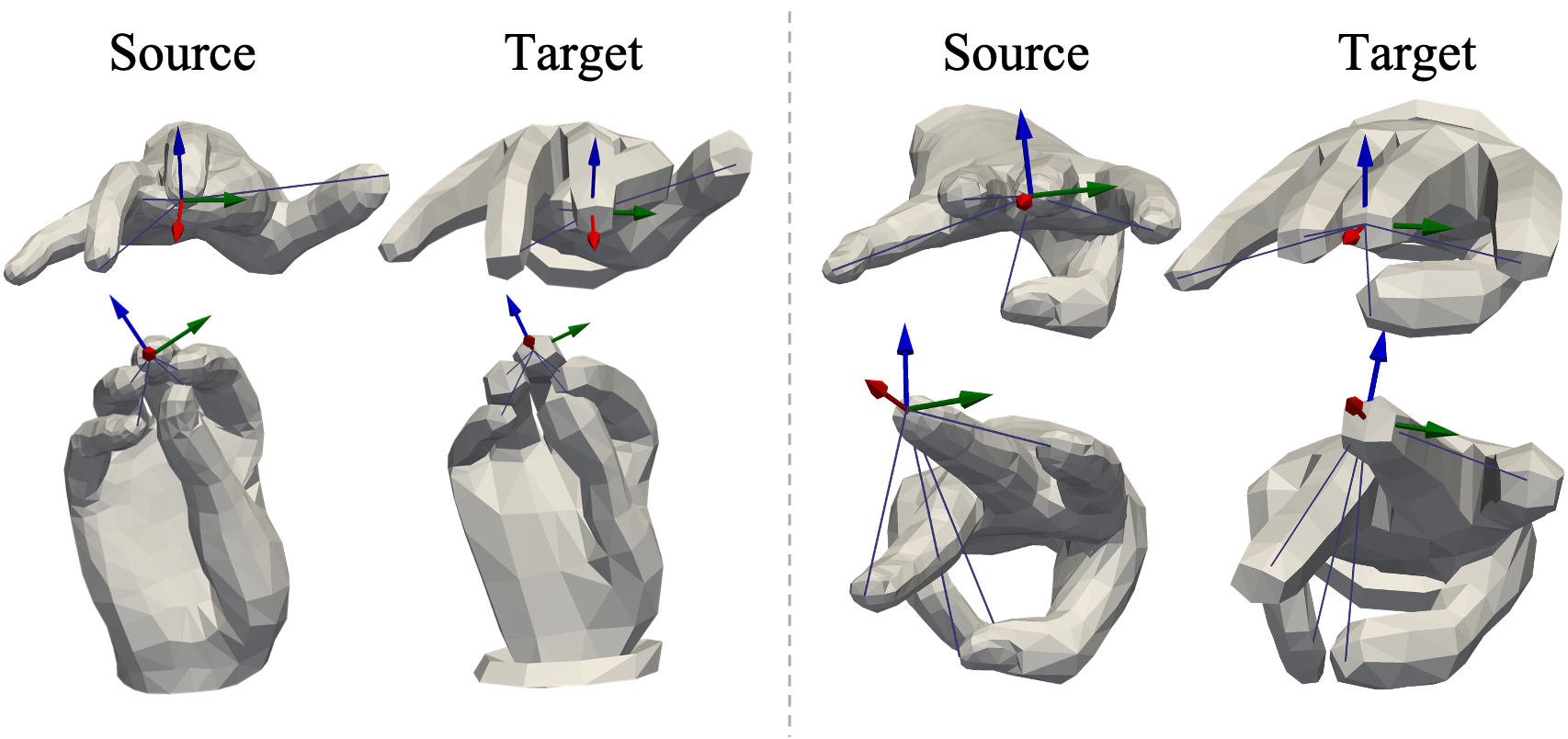

Human hands, the primary means of non-verbal communication, convey intricate semantics in various scenarios. Because people are highly sensitive to hand motions, even minor errors can significantly degrade the user experience. Real applications often involve multiple avatars with varying hand shapes, which makes it important to preserve the intricate semantics of hand motions across avatars. This paper therefore aims to transfer hand motion semantics between diverse avatars based on their respective hand models. To address this problem, we introduce a novel anatomy-based semantic matrix (ASM) that encodes the semantics of hand motions. The ASM quantifies the positions of the palm and other joints relative to the local frame of the corresponding joint, enabling precise retargeting of hand motions. We then obtain a mapping function from the source ASM to the target hand joint rotations by employing an anatomy-based semantics reconstruction network (ASRN). We train the ASRN using a semi-supervised learning strategy on the Mixamo and InterHand2.6M datasets, and we evaluate our method on intra-domain and cross-domain hand motion retargeting tasks. The qualitative and quantitative results demonstrate that our ASRN significantly outperforms state-of-the-art methods.
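
To make the idea of expressing joint positions in per-joint local frames concrete, the following is a minimal sketch, not the paper's exact ASM definition. It assumes each joint has a world-space position and a world-from-local rotation matrix; the function name `relative_position_matrix` and the array layout are hypothetical choices made for illustration. Entry `[i, j]` holds the position of joint `j` as seen from the local frame of joint `i`.

```python
import numpy as np

def relative_position_matrix(positions, rotations):
    """Illustrative only: positions of all joints in every joint's local frame.

    positions: (J, 3) world-space joint positions (assumed input layout).
    rotations: (J, 3, 3) world-from-local rotation matrices, one per joint.
    Returns an array of shape (J, J, 3) where out[i, j] = R_i^T (p_j - p_i).
    """
    positions = np.asarray(positions, dtype=float)
    rotations = np.asarray(rotations, dtype=float)
    # offsets[i, j] = p_j - p_i, expressed in world coordinates
    offsets = positions[None, :, :] - positions[:, None, :]
    # Rotate each offset into the local frame of joint i: R_i^T @ offset
    return np.einsum("iba,ijb->ija", rotations, offsets)

# Tiny example with three joints and identity local frames
example_positions = np.array([[0.0, 0.0, 0.0],
                              [1.0, 0.0, 0.0],
                              [1.0, 2.0, 0.0]])
example_rotations = np.tile(np.eye(3), (3, 1, 1))
example_matrix = relative_position_matrix(example_positions, example_rotations)
```

Under these assumptions the representation is unchanged by a rigid motion of the whole hand, since both the offsets and the local frames rotate together. That property is one reason a local-frame encoding is a natural fit for comparing poses across differently placed avatars.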