
Zijie Ye

I'm a 5th-year PhD candidate at the Department of Computer Science and Technology (DCST), Tsinghua University. My advisor is Prof. Jia Jia. I received my B.Eng. degree from DCST, Tsinghua University in 2020. I work on 3D human motion generation and processing. I am actively looking for full-time positions. Please send me an email if you are interested.


Selected Publications


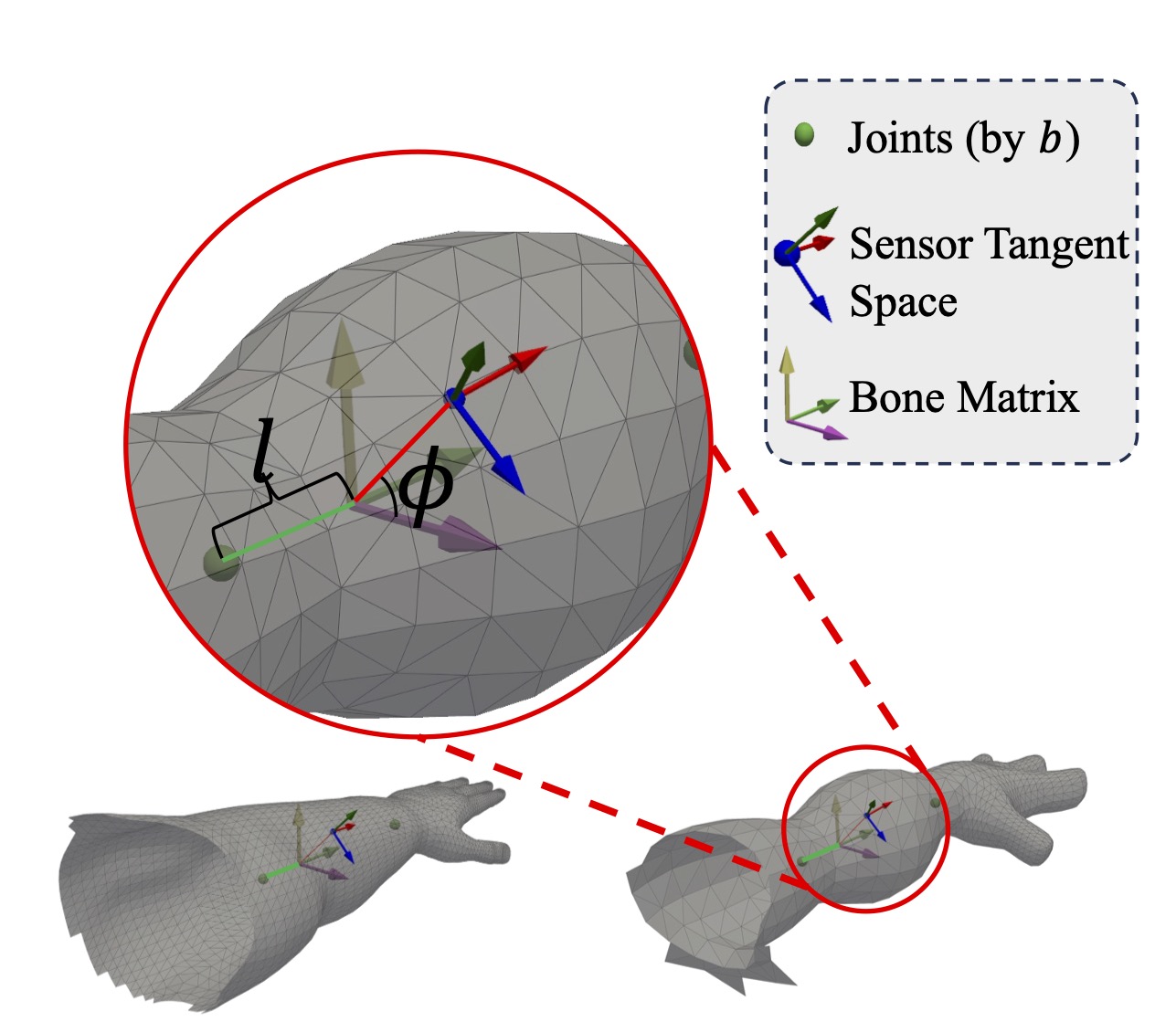

Skinned Motion Retargeting with Dense Geometric Interaction Perception

Zijie Ye, Jia-Wei Liu, Jia Jia, Shikun Sun, Mike Zheng Shou

NeurIPS, Spotlight, 2024

PDF / Project Page / Code

We introduce MeshRet, a pioneering solution that facilitates geometric interaction-aware motion retargeting across varied mesh topologies in a single pass. We present the SCS and the novel DMI field to guide the training of MeshRet, effectively encapsulating both contact and non-contact interaction semantics.


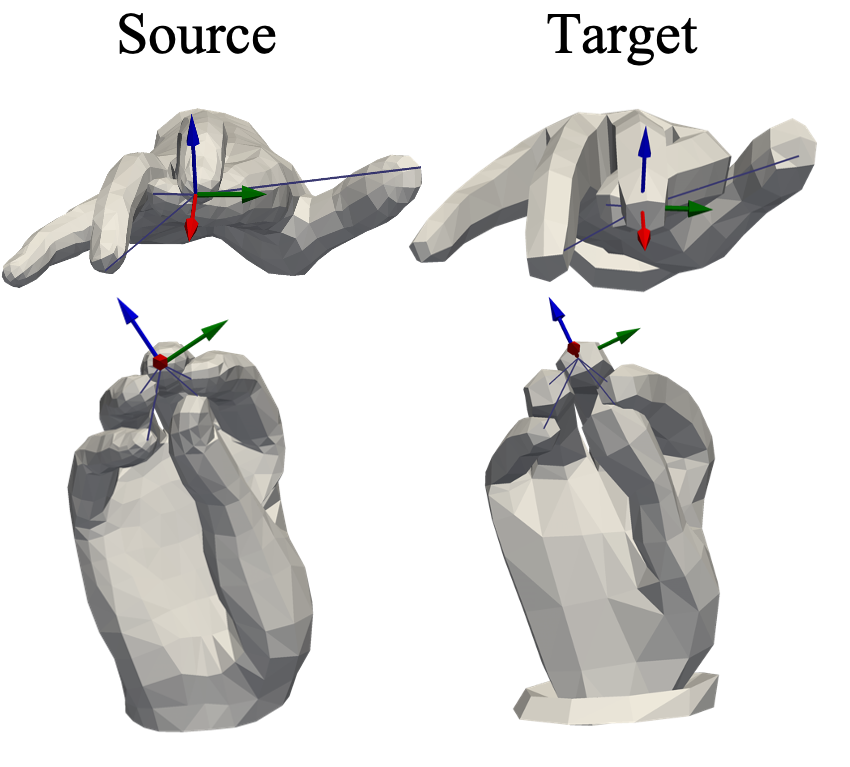

Semantics2Hands: Transferring Hand Motion Semantics between Avatars

Zijie Ye, Jia Jia, Junliang Xing

ACM MM, Oral, Brave New Idea Award, 2023

PDF / Project Page / arXiv / Code

Given a source hand motion and a target hand model, our method retargets realistic hand motions to the target with high fidelity while preserving intricate motion semantics.


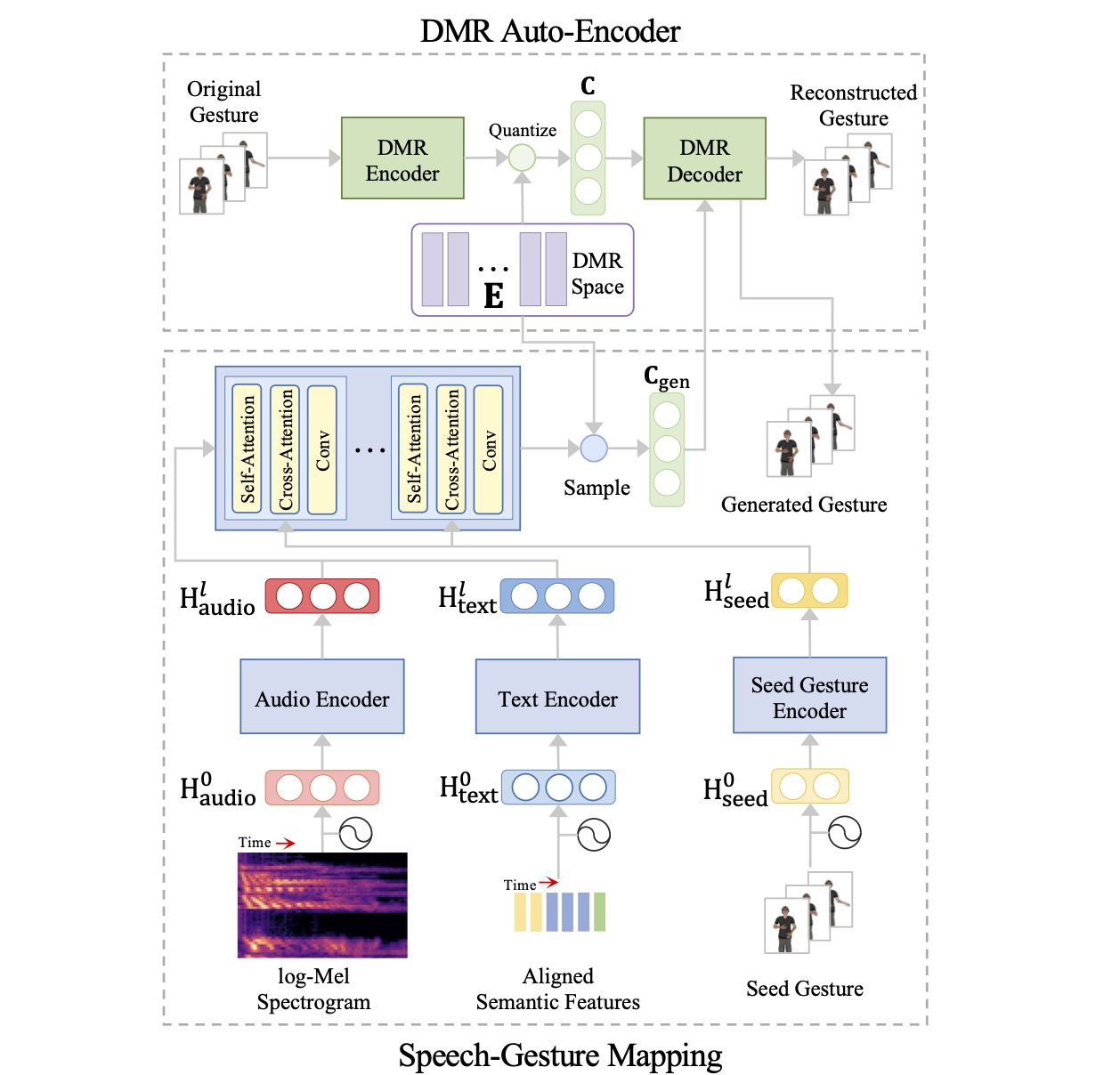

Salient Co-Speech Gesture Synthesizing with Discrete Motion Representation

Zijie Ye, Jia Jia, Haozhe Wu, Shuo Huang, Shikun Sun, Junliang Xing

ICASSP, 2023

PDF / Code

We propose to synthesize co-speech gestures using a discrete motion representation (DMR). By learning a DMR space for gesture motions and modeling the distribution of DMR, our approach generates higher-quality salient motions.


Human motion modeling with deep learning: A survey

Zijie Ye, Haozhe Wu, Jia Jia

AI Open, 2022

Paper

We present a comprehensive survey of recent research on human motion modeling with deep learning techniques.


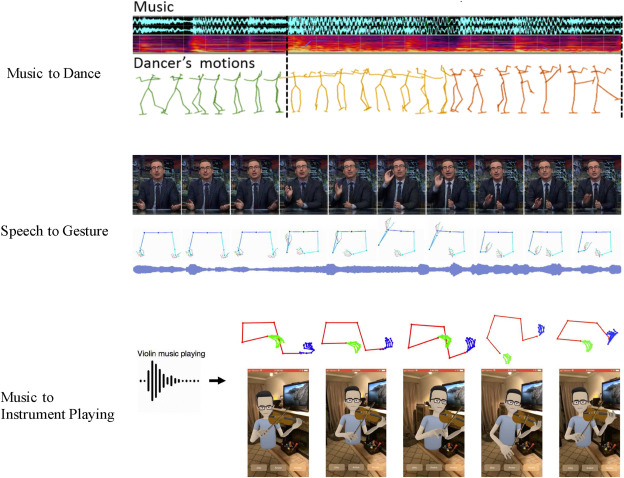

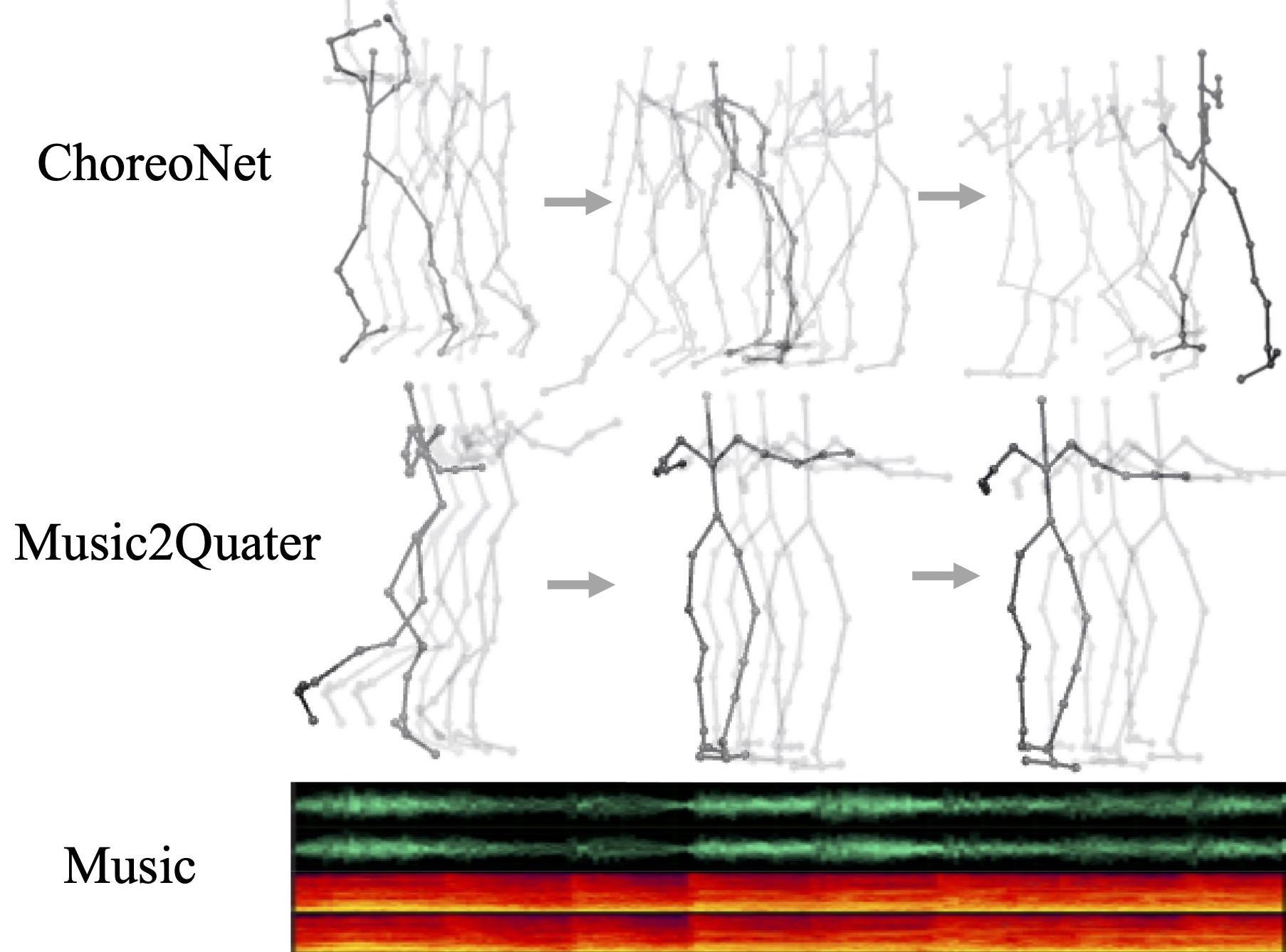

ChoreoNet: Towards Music to Dance Synthesis with Choreographic Action Unit

Zijie Ye, Haozhe Wu, Jia Jia, Yaohua Bu, Wei Chen, Fanbo Meng, Yanfeng Wang

ACM MM, Oral, 2020

PDF / arXiv

We propose to formalize human choreography knowledge by defining the choreographic action unit (CAU) and introducing it into music-to-dance synthesis. We propose a two-stage framework, ChoreoNet, to implement the music-CAU-skeleton mapping. Experiments demonstrate the effectiveness of our method.

Misc



Based on Jon Barron's website.
